Practical Machine Learning with LightGBM and Python

LightGBM, a gradient boosting framework developed by Microsoft, has gained immense popularity in the machine learning community due to its speed and efficiency. It is particularly effective for large datasets, enabling practitioners to achieve higher performance with fewer resources. This article explores the practical applications of LightGBM using Python, detailing how to install the library and implement it for various machine learning tasks. We will also cover best practices for tuning LightGBM models to optimize their performance. Additionally, we will provide sample code and datasets to facilitate hands-on learning. Finally, we will discuss common pitfalls and how to avoid them when working with LightGBM.

Introduction to LightGBM

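LightGBM (Light Gradient Boosting Machine) is an open-source, distributed, high-performance implementation of gradient boosting. It is designed to be efficient in both memory usage and computation speed, making it suitable for large-scale machine learning tasks. Unlike traditional gradient boosting implementations, which scan the sorted values of every feature to find a split, LightGBM uses a histogram-based approach: it buckets continuous feature values into a fixed number of discrete bins and evaluates candidate splits only at the bin boundaries, which reduces both training time and memory use. It also handles categorical features directly, without requiring one-hot encoding.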
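To make the histogram idea concrete, here is a minimal sketch in plain NumPy. It is not LightGBM's actual implementation: it assumes a single feature, equal-width bins, and a simplified squared-error gain with unit hessians. The function name `best_histogram_split` and the toy data are illustrative only.

```python
import numpy as np


def best_histogram_split(x, grad, n_bins=16):
    """Pick a split threshold for one feature from a histogram of gradients.

    Simplifying assumptions: equal-width bins and unit hessians.
    """
    # Bucket the continuous feature into n_bins equal-width bins.
    edges = np.linspace(x.min(), x.max(), n_bins + 1)
    # Use interior edges only, so bin ids fall in [0, n_bins - 1].
    bin_ids = np.digitize(x, edges[1:-1])

    # Build the histogram: gradient sum and sample count per bin.
    grad_hist = np.bincount(bin_ids, weights=grad, minlength=n_bins)
    count_hist = np.bincount(bin_ids, minlength=n_bins)

    total_grad, total_count = grad_hist.sum(), count_hist.sum()
    best_gain, best_threshold = 0.0, None
    left_grad, left_count = 0.0, 0

    # Scan bin boundaries only: O(n_bins) candidates instead of O(n_samples).
    for b in range(n_bins - 1):
        left_grad += grad_hist[b]
        left_count += count_hist[b]
        right_count = total_count - left_count
        if left_count == 0 or right_count == 0:
            continue
        right_grad = total_grad - left_grad
        gain = (
            left_grad**2 / left_count
            + right_grad**2 / right_count
            - total_grad**2 / total_count
        )
        if gain > best_gain:
            best_gain, best_threshold = gain, edges[b + 1]
    return best_threshold, best_gain


# Toy data: the target jumps from 1 to 3 at x = 6.
rng = np.random.default_rng(0)
x = rng.uniform(0.0, 10.0, size=1000)
y = np.where(x < 6.0, 1.0, 3.0)

# For squared error with an initial prediction of 0, the gradient is -y.
threshold, gain = best_histogram_split(x, grad=-y)
print(f"Best threshold: {threshold:.2f} (gain {gain:.1f})")
```

The chosen threshold can only land on a bin boundary, so it approximates the true change point rather than matching it exactly. That loss of resolution is the trade-off a histogram-based method accepts in exchange for speed.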

Installing LightGBM

To get started with LightGBM in Python, you need to install the library. You can easily install LightGBM via pip. Open your terminal or command prompt and run the following command:

```bash
pip install lightgbm
```

The example in this article also uses NumPy and scikit-learn. NumPy is installed automatically as a LightGBM dependency, but scikit-learn is not, so make sure both are available:

```bash
pip install numpy scikit-learn
```

Practical Implementation

Once you have installed LightGBM, you can start implementing it in your machine learning projects. Below is a practical example that illustrates how to use LightGBM for a classification task using the popular Iris dataset.

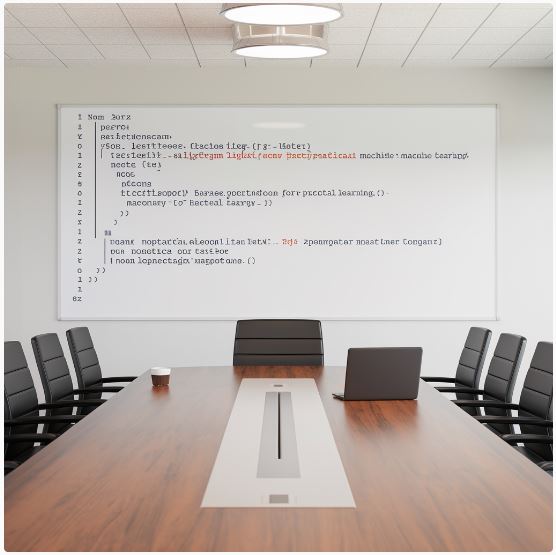

Sample Code

```python
import lightgbm as lgb
import numpy as np
from sklearn.datasets import load_iris
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

# Load dataset
iris = load_iris()
X = iris.data
y = iris.target

# Split the dataset into training and test sets
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Create a LightGBM dataset
train_data = lgb.Dataset(X_train, label=y_train)

# Set parameters for LightGBM
params = {
    'objective': 'multiclass',
    'num_class': 3,
    'metric': 'multi_logloss',
    'learning_rate': 0.1,
    'num_leaves': 31,
    'max_depth': -1,  # -1 means no depth limit
    'verbose': -1,  # silence training logs
}

# Train the model
model = lgb.train(params, train_data, num_boost_round=100)

# Make predictions: one row of class probabilities per sample
y_pred = model.predict(X_test)

# Pick the most probable class for each sample
y_pred_max = np.argmax(y_pred, axis=1)

# Calculate accuracy
accuracy = accuracy_score(y_test, y_pred_max)
print(f'Accuracy: {accuracy:.2f}')
```

Hyperparameter Tuning

To achieve optimal performance, hyperparameter tuning is crucial. Some essential parameters to consider when tuning your LightGBM model include:

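- num_leaves: The maximum number of leaves in one tree (default 31). Because LightGBM grows trees leaf-wise, this is the main control on model complexity: larger values can improve accuracy but increase the risk of overfitting.

- max_depth: The maximum depth of a tree; -1 (the default) means no limit. Limiting the depth helps prevent overfitting. When both are set, num_leaves should generally stay below 2^max_depth.

- learning_rate: The shrinkage applied to each tree's contribution (default 0.1). A lower learning rate often generalizes better but requires more boosting rounds.

- n_estimators: The number of boosting iterations. This is the scikit-learn-style name; lgb.train takes the same setting as num_boost_round. More iterations can improve performance but increase computation time and, past a certain point, the risk of overfitting.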

Tools such as grid search or random search (for example, scikit-learn's GridSearchCV and RandomizedSearchCV) can help find a good combination of these parameters.

Common Pitfalls

When working with LightGBM, consider the following pitfalls:

- Overfitting: Monitor the model’s performance on validation data during training, and stop adding trees once the validation metric stops improving (early stopping).

- Data Leakage: Ensure that the training and test datasets are appropriately separated to avoid contamination.

- Feature Importance: Regularly check feature importance to understand which features contribute most to the model’s performance.

Conclusion

LightGBM is a powerful tool for practitioners looking to implement machine learning models efficiently. Its speed, scalability, and performance make it suitable for various applications. By following the best practices outlined in this article, you can harness the full potential of LightGBM in your projects.

Suggested Reading

To further enhance your understanding of LightGBM and machine learning, consider exploring the following topics:

- Gradient Boosting vs. Random Forests: Understand the differences and use cases for each method.

- Hyperparameter Optimization Techniques: Learn more about advanced techniques for optimizing model performance.

- Feature Engineering: Explore methods to create and select features that improve model performance.

- Model Evaluation Metrics: Gain insights into various metrics used to evaluate model performance, including precision, recall, and F1-score.