Mastering Transformers: A Learning Center for Machine Learning

Transformers are a class of neural networks first proposed in the seminal 2017 paper “Attention Is All You Need” by Vaswani et al. They are a major breakthrough in artificial intelligence, especially in natural language processing. Earlier sequential models such as GRUs and LSTMs process their input one step at a time. Transformers instead process an entire input sequence at once through a self-attention mechanism, which lets them capture complex dependencies and context efficiently. This design underpins models such as GPT-4, which can translate between many languages and generate fluent natural-language text. Although these models are immensely influential, many students have a great deal of trouble understanding how transformers actually work. The architecture combines several intricate components, including multi-head attention, positional encodings, and feed-forward networks. Implementing it effectively also requires a solid mathematical foundation and careful hyperparameter tuning. These difficulties are magnified by the field’s rapid pace of progress, which leaves learners struggling to keep up.

Our center aims to tackle these challenges with an in-depth set of resources that make transformer models accessible. Our custom workshops, interactive tutorials, and expert-led sessions are designed to demystify complex ideas by breaking them down into simple, easy-to-understand parts. Our comprehensive courses take a practical approach that bridges theory and real-world applications, equipping students to make the most of transformer technology.

Core Concepts of Transformers

Attention mechanism

Transformers extend deep learning to sequence processing and generation, initially for text, by relying on attention mechanisms. Traditional models move through a sequence one element at a time. The attention mechanism instead gives a transformer a view of all elements at once, which greatly strengthens its ability to learn complex relationships between words.

The attention mechanism essentially scores how relevant each part of the input is to every other part. In self-attention, different parts of the sequence receive different levels of focus, or “attention”. For example, when processing a sentence, the model can evaluate how each word relates to all the other words. This lets it take the surrounding context into account and generate more coherent outputs. The mechanism is implemented with three quantities: queries, keys, and values (Q, K, V). Each token in the sequence is mapped to a query, a key, and a value vector through learned linear projections (weight matrices W_Q, W_K, and W_V). Each query is compared against every key with a dot product to produce attention scores. These scores are scaled by the square root of the key dimension and passed through a softmax. The result is a set of attention weights that tells each word how much focus to give every other word. The output of the attention layer is the sum of the value vectors weighted by these attention weights.
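The computation described above is scaled dot-product attention, softmax(QKᵀ / √d_k) V. Below is a minimal pure-Python sketch of it. The toy embeddings and projection matrices are made-up numbers standing in for learned parameters. Real implementations use batched tensor operations in a framework such as PyTorch or TensorFlow.

```python
import math

def matmul(a, b):
    """Multiply an (n x k) matrix by a (k x m) matrix, both stored as lists of rows."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def transpose(m):
    return [list(col) for col in zip(*m)]

def softmax(row):
    """Numerically stable softmax over one row of scores."""
    mx = max(row)
    exps = [math.exp(s - mx) for s in row]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x, w_q, w_k, w_v):
    """Scaled dot-product self-attention over x (n tokens x d_model)."""
    q = matmul(x, w_q)  # queries: n x d_k
    k = matmul(x, w_k)  # keys:    n x d_k
    v = matmul(x, w_v)  # values:  n x d_v
    d_k = len(q[0])
    # Compare every query with every key, then scale by sqrt(d_k).
    scores = matmul(q, transpose(k))
    scaled = [[s / math.sqrt(d_k) for s in row] for row in scores]
    # Row i holds how much token i attends to each token j; each row sums to 1.
    weights = [softmax(row) for row in scaled]
    # Output for token i is the weighted sum of all value vectors.
    return matmul(weights, v), weights

# Toy input: 3 tokens with 4-dimensional embeddings (made-up values).
x = [[1.0, 0.0, 1.0, 0.0],
     [0.0, 2.0, 0.0, 2.0],
     [1.0, 1.0, 1.0, 1.0]]
# Hypothetical 4 x 2 projection matrices; a trained model learns these.
w_q = [[1, 0], [0, 1], [1, 0], [0, 1]]
w_k = [[0, 1], [1, 0], [0, 1], [1, 0]]
w_v = [[1, 0], [0, 1], [0, 1], [1, 0]]

out, weights = self_attention(x, w_q, w_k, w_v)
```

Each row of `weights` is one token's attention distribution over the sequence, and each row of `out` is that token's new, context-aware vector.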

The Transformer architecture is built entirely around attention. Within each layer, every position in the sequence can interact directly with every other position through query-key-value interactions. On top of this, the model uses several self-attention heads (n > 1) that run in parallel. Each head has its own learned projections, so different heads can capture different patterns of relationships or dependencies between elements of the input sequence. Positional encodings supply the position of each word in the sequence. Combined with them, multi-head attention helps transformers model long-range dependencies and context better than previous approaches.
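Multi-head attention can be sketched as several independent attention heads whose outputs are concatenated and passed through a final output projection, called W^O in the original paper. This minimal pure-Python sketch makes three simplifying assumptions. The projection weights are random stand-ins for learned parameters. The heads run one after another in a loop rather than as one batched operation. And `d_model` is assumed to be divisible by the number of heads.

```python
import math
import random

def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def softmax(row):
    mx = max(row)
    exps = [math.exp(s - mx) for s in row]
    total = sum(exps)
    return [e / total for e in exps]

def attention_head(x, w_q, w_k, w_v):
    """One scaled dot-product attention head."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(q[0])
    scores = [[sum(a * b for a, b in zip(q_row, k_row)) / math.sqrt(d_k) for k_row in k]
              for q_row in q]
    weights = [softmax(row) for row in scores]
    return matmul(weights, v)

def random_matrix(rows, cols, rng):
    return [[rng.gauss(0.0, 0.5) for _ in range(cols)] for _ in range(rows)]

def multi_head_attention(x, num_heads, d_model, rng):
    """Run num_heads heads, concatenate their outputs, then project back to d_model."""
    d_head = d_model // num_heads
    head_outputs = []
    for _ in range(num_heads):
        # Each head gets its own projections (random here, learned in a real model).
        w_q, w_k, w_v = (random_matrix(d_model, d_head, rng) for _ in range(3))
        head_outputs.append(attention_head(x, w_q, w_k, w_v))
    # Concatenate along the feature dimension: n x (num_heads * d_head).
    concat = [sum((h[i] for h in head_outputs), []) for i in range(len(x))]
    w_o = random_matrix(num_heads * d_head, d_model, rng)
    return matmul(concat, w_o)  # n x d_model

rng = random.Random(0)
x = random_matrix(5, 8, rng)  # 5 tokens, d_model = 8
y = multi_head_attention(x, num_heads=2, d_model=8, rng=rng)
```

The output keeps the input's shape (one `d_model`-sized vector per token), which is what lets transformer layers be stacked.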

Encoder-decoder architecture

Encoder

The job of the encoder is to take an input sequence and transform it into a context representation that the decoder can use to generate the output sequence. In early recurrent sequence-to-sequence models this is a single fixed-size vector, whereas a transformer encoder produces one vector per input position. Encoders run the input sequence through a stack of layers. These are either recurrent (such as LSTMs and GRUs) or, in the transformer’s case, self-attention layers.

1. Operation: Words are first converted into dense vectors (embeddings), because the relationships between them cannot be computed until they are represented numerically. In transformers, these embeddings are combined with positional encodings and passed through several layers of self-attention and feed-forward networks. Layer by layer, the encoder refines these representations to build a useful understanding of the input data.

2. Context Representation: The encoder outputs a set of vectors, one for each element in the input sequence. Each vector represents that element in the context of all the others. A transformer encoder processes all positions at once rather than one element at a time, so this context is simply a list of embeddings, one per position in the input sequence.
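The embedding-plus-positional-encoding step above can be sketched in plain Python. The original transformer paper uses fixed sinusoidal encodings: PE(pos, 2i) = sin(pos / 10000^(2i/d_model)) and PE(pos, 2i+1) = cos(pos / 10000^(2i/d_model)). The tiny vocabulary and embedding values below are made up for illustration; a real model learns its embedding table. Many later models use learned position embeddings instead.

```python
import math

def positional_encoding(seq_len, d_model):
    """Sinusoidal positional encodings, one d_model-sized vector per position."""
    pe = [[0.0] * d_model for _ in range(seq_len)]
    for pos in range(seq_len):
        for i in range(0, d_model, 2):  # i is the even index 2i in the formula
            angle = pos / (10000 ** (i / d_model))
            pe[pos][i] = math.sin(angle)
            if i + 1 < d_model:
                pe[pos][i + 1] = math.cos(angle)
    return pe

# Hypothetical toy vocabulary and embedding table (learned in a real model).
vocab = {"the": 0, "cat": 1, "sat": 2}
d_model = 4
embedding_table = [[0.1 * (tok + 1)] * d_model for tok in range(len(vocab))]

tokens = ["the", "cat", "sat"]
embeddings = [embedding_table[vocab[t]] for t in tokens]
pe = positional_encoding(len(tokens), d_model)
# Encoder input: one vector per position, embedding plus position signal.
encoder_input = [[e + p for e, p in zip(emb, pos)] for emb, pos in zip(embeddings, pe)]
```

Because the encodings are added rather than concatenated, each position still has exactly one `d_model`-sized vector. That vector now carries both what the token is and where it sits.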

Decoder

The decoder then takes the context representation created by the encoder and uses it to produce an output sequence. It works token by token, conditioning on both the encoder’s context and all of the tokens generated up to the current step.
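The token-by-token loop can be sketched with greedy decoding, which always picks the highest-scoring next token. Beam search and sampling are common alternatives. The scoring function here is a hypothetical stand-in that uses a made-up word-by-word lookup. A trained decoder would instead compute these scores with masked self-attention, cross-attention, and feed-forward layers, followed by a projection onto the vocabulary.

```python
# Hypothetical toy vocabulary and "translation" table, used only to drive the sketch.
VOCAB = ["<s>", "</s>", "le", "chat", "dort"]
TOY_TRANSLATION = {"the": "le", "cat": "chat", "sleeps": "dort"}

def toy_next_token_logits(context, generated):
    """Hypothetical stand-in for a trained decoder: one score per VOCAB entry."""
    step = len(generated) - 1  # tokens produced so far, excluding the start token
    target = TOY_TRANSLATION[context[step]] if step < len(context) else "</s>"
    return [1.0 if tok == target else 0.0 for tok in VOCAB]

def greedy_decode(context, max_len=10):
    """Generate one token at a time, conditioning on the context and prior output."""
    generated = ["<s>"]
    while len(generated) < max_len:
        logits = toy_next_token_logits(context, generated)
        best = max(range(len(VOCAB)), key=lambda i: logits[i])
        generated.append(VOCAB[best])
        if VOCAB[best] == "</s>":  # stop once the end token is produced
            break
    return generated[1:]  # drop the start token

print(greedy_decode(["the", "cat", "sleeps"]))  # → ['le', 'chat', 'dort', '</s>']
```

The `max_len` cap guarantees the loop terminates even if the model never emits the end token.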

1. Attention Mechanism: The transformer decoder combines self-attention and cross-attention. Self-attention lets the decoder attend to the tokens it has already generated, with a causal mask preventing it from looking at future positions. Cross-attention lets each output position attend to the encoder’s context, aligning the token currently being generated with the relevant parts of the input.

2. Generation: The decoder uses the encoder’s context and the previously generated tokens to output each token of the output sequence. These tokens are produced by a stack of layers that apply attention mechanisms and feed-forward networks, followed by a projection onto the vocabulary.
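The two kinds of decoder attention described above differ mainly in two ways: where the keys and values come from, and whether a causal mask is applied. This is a simplified sketch under stated assumptions. It omits the learned Q/K/V projections, residual connections, and layer normalization, and the vectors are made-up toy values.

```python
import math

def softmax(row):
    mx = max(row)  # finite, because position 0 is never masked
    exps = [math.exp(s - mx) for s in row]  # exp(-inf) evaluates to 0.0
    total = sum(exps)
    return [e / total for e in exps]

def attend(queries, keys, values, causal=False):
    """Scaled dot-product attention; with causal=True, position i ignores positions > i."""
    d_k = len(queries[0])
    outputs, all_weights = [], []
    for i, q in enumerate(queries):
        scores = []
        for j, k in enumerate(keys):
            if causal and j > i:
                scores.append(float("-inf"))  # mask out future tokens
            else:
                scores.append(sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k))
        w = softmax(scores)
        all_weights.append(w)
        outputs.append([sum(w[j] * values[j][c] for j in range(len(values)))
                        for c in range(len(values[0]))])
    return outputs, all_weights

# Toy vectors: 3 decoder positions generated so far, 4 encoder positions.
decoder_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
encoder_context = [[0.5, 0.1], [0.2, 0.9], [0.7, 0.7], [0.0, 0.3]]

# 1) Masked self-attention over the decoder's own tokens.
self_out, self_w = attend(decoder_states, decoder_states, decoder_states, causal=True)
# 2) Cross-attention: queries from the decoder, keys and values from the encoder.
cross_out, cross_w = attend(self_out, encoder_context, encoder_context)
```

With the mask, the first decoder position can only attend to itself. Cross-attention weights, by contrast, span every encoder position.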

Significance

Encoder-decoder architectures are especially powerful for complex sequence-to-sequence transformations because they largely decouple encoding from decoding. This separation makes it easy to handle input and output sequences of different lengths. It also allows the model to learn intricate mappings from one sequence space to another.

Scalability: Attention processes all positions in parallel, so transformers avoid the slow, step-by-step computation of recurrent models. They also capture long-range dependencies more directly, mitigating the main drawbacks of traditional recurrent methods.

Versatility: The architecture is also used beyond NLP. In image captioning, for example, the encoder processes image features and the decoder produces descriptive text.

Examples of transformer applications (NLP, computer vision)

Transformers have had a major impact on many fields, most prominently natural language processing (NLP) and computer vision. In NLP, transformers underpin models such as GPT-4 and BERT, which are used for tasks including text generation, machine translation, and sentiment analysis. These models use self-attention to process text with strong awareness of context. In the case of generative models such as GPT-4, they also produce coherent, human-like text. Computer vision has not been left far behind: transformer-based architectures such as Vision Transformers (ViTs) have been very successful in tasks like image classification, object detection, and segmentation. By treating image patches as sequences, vision transformers often match or exceed convolutional neural networks on recognition tasks. This shows how well the architecture carries over to very different kinds of data.
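The “image patches as sequences” step of a Vision Transformer can be sketched as splitting an image into non-overlapping square patches and flattening each patch into a vector. This sketch assumes a single-channel image stored as a list of rows, with height and width divisible by the patch size. In a real ViT, each flattened patch is then linearly projected to an embedding and combined with position embeddings before entering the transformer encoder.

```python
def image_to_patches(image, patch_size):
    """Split an H x W image into flattened patch_size x patch_size patches, in raster order."""
    h, w = len(image), len(image[0])
    if h % patch_size or w % patch_size:
        raise ValueError("image height and width must be divisible by patch_size")
    patches = []
    for top in range(0, h, patch_size):
        for left in range(0, w, patch_size):
            # Flatten the patch row by row into one vector ("token").
            patch = [image[r][c]
                     for r in range(top, top + patch_size)
                     for c in range(left, left + patch_size)]
            patches.append(patch)
    return patches

# Toy 4 x 4 "image" whose pixel values are 0..15.
image = [[r * 4 + c for c in range(4)] for r in range(4)]
patches = image_to_patches(image, 2)  # a sequence of 4 tokens, each of length 4
```

The resulting list plays the same role for images that a list of token embeddings plays for text.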

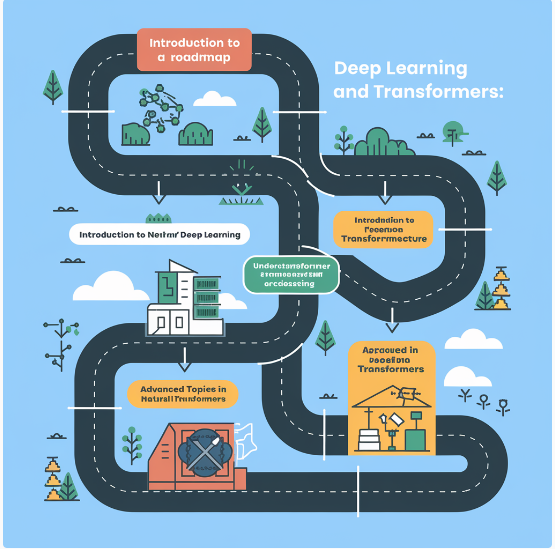

Our Transformer Learning Center

- Courses and Curriculum: Our center offers a transformer curriculum that ranges from introductory material to advanced applications. Courses include “Introduction to Transformers,” “Advanced NLP with Transformations,” and “Transformers in Computer Vision.” They are designed to give students an in-depth understanding of the theoretical principles and of how to apply them.

- Teaching Methodologies: Hands-on learning is the name of our game. We use interactive projects and practical exercises to demonstrate concepts. Students learn by building and tweaking transformer models on realistic use cases, which ensures that theoretical understanding translates into practice.

- Instructor Expertise: Our instructors have worked in industry, and their academic background is the cherry on top. They bring years of hands-on experience in research and commercial applications. They share the latest developments along with their perspective on putting theory into practice.

- Learning Resources: We provide a range of resources, from curated datasets to essential code libraries and the latest tools. Students get access to the same kinds of tools and datasets used by industry researchers. Pre-processed versions of these datasets come with example code that makes it easy to experiment with state-of-the-art transformer models in TensorFlow and PyTorch.

Key Reasons to Learn Transformers:

- Better Comprehension of Leading Models: Learning transformers gives you insight into the newest ML models, from GPT-4 and BERT to Vision Transformers, which are driving progress across AI.

- Transferability: Because transformers are used across domains, from natural language processing to computer vision, students can apply this skill set to tasks such as text generation, translation, and image classification.

- Better Model Performance: A deeper understanding of how transformers work internally helps you build robust, high-performing models. Such models handle complex data patterns and long-range dependencies better than conventional architectures.

- Foundation for Advanced Research: A good understanding of transformers is a key foundation for research in advanced AI topics such as attention mechanisms, pre-training methodologies, and transfer learning. It can ultimately support thesis work and academic contributions to cutting-edge research.

- Career Advantage: Knowing transformers makes you more valuable in the AI and machine learning job market. It strengthens your career profile and employability in a tech-driven industry.

- Improved Problem-Solving Skills: Working with transformers encourages deeper critical thinking. It also sharpens your approach to challenges such as model optimization, hyperparameter tuning, and working with large-scale data.

- A Thriving Community: Learning transformers connects you with an active community of researchers and builders who are eager to share their knowledge and open to collaboration.

- Practical Exposure to State-of-the-Art Tools: Experience with the frameworks used to build transformer models (e.g., TensorFlow, PyTorch, and Hugging Face) prepares you to deploy them in real-world applications.

Conclusion:

In short, learning transformers is key to understanding state-of-the-art AI in areas such as NLP and computer vision. Mastering this paradigm-shifting architecture lets you improve your models, tackle new types of AI problems, and work at the forefront of AI innovation. Our center remains committed to providing the tools, support, and practical experience needed to make full use of transformers, so that learners can make meaningful advances in impactful work.