PyTorch Lightning: Efficiently Handling Multiple Train Loaders

PyTorch Lightning is a high-performance framework designed to simplify the process of training deep neural networks. One of its powerful features is the ability to handle multiple train loaders simultaneously. This is particularly useful for scenarios where you have different datasets or want to implement complex training strategies.

Understanding Train Loaders

A train loader in PyTorch is an iterable, typically a torch.utils.data.DataLoader, that yields batches of data from a dataset. It’s essential for feeding data to your neural network during training. By using multiple train loaders, you can manage different datasets side by side or create specialized training regimes.
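To make the idea of a loader concrete, here is a minimal sketch in plain PyTorch. The toy tensors and the names `features`, `labels`, and `batch_shapes` are invented for illustration only.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# Toy dataset (illustrative): 10 samples with 3 features each, plus labels.
features = torch.arange(30, dtype=torch.float32).reshape(10, 3)
labels = torch.arange(10)
dataset = TensorDataset(features, labels)

# A DataLoader is an iterable; each pass over it yields batches.
loader = DataLoader(dataset, batch_size=4, shuffle=False)

# Collect the shape of every batch: 10 samples split into sizes 4, 4, 2.
batch_shapes = [(tuple(x.shape), tuple(y.shape)) for x, y in loader]
for x_shape, y_shape in batch_shapes:
    print(x_shape, y_shape)
```

The last batch is smaller because 10 is not a multiple of 4; passing `drop_last=True` to the `DataLoader` would discard it instead.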

Why Use Multiple Train Loaders?

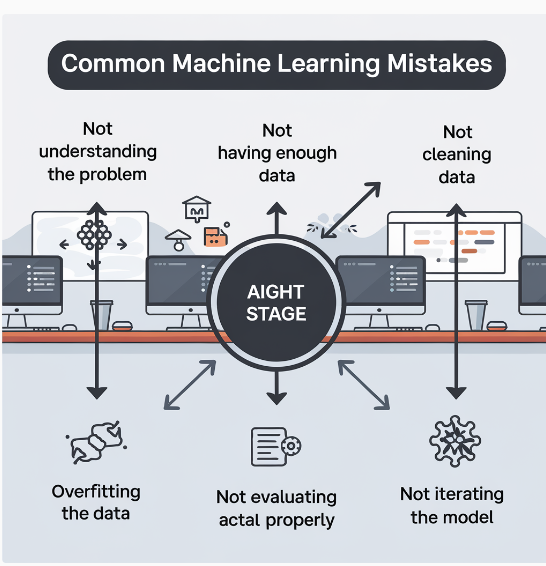

- Different Datasets: If you have multiple datasets with varying characteristics (e.g., image and text data), using separate train loaders allows you to tailor your training process accordingly.

- Data Augmentation: You can apply different augmentation techniques to each train loader, enhancing the diversity of your training data and improving model generalization.

- Custom Training Strategies: Multiple train loaders can be used to implement advanced training strategies like curriculum learning or adversarial training.

- Imbalanced Datasets: By using separate train loaders for different classes or subsets of your data, you can address class imbalance issues.
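The imbalanced-dataset case above can be sketched with one loader per class. This is only one possible approach, written in plain PyTorch; the 90/10 toy data and the names `majority_loader` and `minority_loader` are assumptions made for the example.

```python
import torch
from torch.utils.data import DataLoader, Subset, TensorDataset

# Toy imbalanced dataset (illustrative): 90 samples of class 0, 10 of class 1.
labels = torch.cat([
    torch.zeros(90, dtype=torch.long),
    torch.ones(10, dtype=torch.long),
])
features = torch.randn(100, 4)
dataset = TensorDataset(features, labels)

# Split the sample indices by class label.
majority_idx = (labels == 0).nonzero(as_tuple=True)[0].tolist()
minority_idx = (labels == 1).nonzero(as_tuple=True)[0].tolist()

# One loader per class, each drawing the same number of samples per batch.
majority_loader = DataLoader(Subset(dataset, majority_idx), batch_size=8, shuffle=True)
minority_loader = DataLoader(Subset(dataset, minority_idx), batch_size=8, shuffle=True)

# Peek at the first batch of labels from each loader.
_, y_major = next(iter(majority_loader))
_, y_minor = next(iter(minority_loader))
print(y_major.tolist(), y_minor.tolist())
```

Pairing one batch from each loader at every step gives the model an equal number of examples from both classes, even though the underlying data is skewed 9 to 1.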

Implementing Multiple Train Loaders in PyTorch Lightning

To use multiple train loaders in PyTorch Lightning, you can define a custom LightningDataModule class (the same train_dataloader hook can also live on the LightningModule itself). This class handles the data loading and preprocessing tasks. Here’s a basic example:

Python

from typing import Optional

import pytorch_lightning as pl
from torch.utils.data import DataLoader


class MyDataModule(pl.LightningDataModule):
    def __init__(self, data_dir):
        super().__init__()
        self.data_dir = data_dir

    def setup(self, stage: Optional[str] = None):
        # Load your datasets here. Dataset1 and Dataset2 are placeholders
        # for your own torch.utils.data.Dataset subclasses.
        # Store them on self so train_dataloader can reach them.
        self.dataset1 = Dataset1(self.data_dir)
        self.dataset2 = Dataset2(self.data_dir)

    def train_dataloader(self):
        train_loader1 = DataLoader(self.dataset1, batch_size=32, num_workers=4)
        train_loader2 = DataLoader(self.dataset2, batch_size=32, num_workers=4)
        return [train_loader1, train_loader2]

    # ... other methods like val_dataloader, test_dataloader


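In the train_dataloader method, you return a list of train loaders. PyTorch Lightning combines them so that each training step receives a list with one batch from every loader; by default, shorter loaders are restarted until the longest one is exhausted.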
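A list of train loaders is not consumed one loader after another. In the Lightning releases this sketch is based on (an assumption worth checking against your installed version), the default combination mode is called max_size_cycle. The pure-Python function below, `combine_max_size_cycle`, is a hypothetical emulation of that behavior for illustration and is not Lightning's own code.

```python
from itertools import cycle


def combine_max_size_cycle(loaders):
    """Yield one list per step holding a batch from every loader.

    Shorter loaders restart until the longest one is exhausted. This is a
    simplified emulation for illustration, not Lightning's implementation.
    """
    longest = max(len(loader) for loader in loaders)
    iterators = [cycle(loader) for loader in loaders]
    for _ in range(longest):
        yield [next(iterator) for iterator in iterators]


# Plain lists of strings stand in for DataLoaders and their batches.
loader_a = ["a0", "a1", "a2"]
loader_b = ["b0", "b1"]

steps = list(combine_max_size_cycle([loader_a, loader_b]))
for step in steps:
    print(step)
```

The epoch has three steps, the length of the longer loader, and the shorter loader wraps around to its first batch on the third step.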

Training with Multiple Train Loaders

Once you have defined your DataModule, you pass it to the Trainer together with your LightningModule, whose training_step consumes the batches. Here’s a simplified example:

Python

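class MyModel(pl.LightningModule):
    # ... your model definition

    def training_step(self, batch, batch_idx):
        # With a list of train loaders, `batch` holds one batch per loader,
        # in the same order as the list returned by train_dataloader.
        batch1, batch2 = batch
        data1, target1 = batch1
        data2, target2 = batch2
        # ... your training logic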


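PyTorch Lightning does not switch between the train loaders during training: every call to training_step receives data from all of them, so your training logic decides how to combine the per-loader batches, for example by summing their losses.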
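One common way to fill in the training logic is a weighted sum of one loss per loader. The sketch below is plain PyTorch and assumes `batch` is a list of (data, target) pairs, one per loader; the helper `combined_loss`, its `weights` parameter, and the toy linear model are hypothetical names chosen for this example.

```python
import torch
from torch import nn


def combined_loss(model, batch, weights=(1.0, 1.0)):
    """Return a weighted sum of per-loader classification losses.

    `batch` is assumed to be a list with one (data, target) pair per
    train loader, in the order the loaders were returned.
    """
    criterion = nn.CrossEntropyLoss()
    return sum(
        weight * criterion(model(data), target)
        for weight, (data, target) in zip(weights, batch)
    )


# Toy model and a fake combined batch from two loaders (illustrative).
model = nn.Linear(4, 3)
batch = [
    (torch.randn(8, 4), torch.randint(0, 3, (8,))),
    (torch.randn(5, 4), torch.randint(0, 3, (5,))),
]

loss = combined_loss(model, batch)
loss.backward()  # gradients flow through both per-loader losses
print(loss.item())
```

Inside a LightningModule, the body of training_step could return the result of such a helper. The weights give a simple knob for how much each dataset contributes to the update.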

Additional Considerations

- Batch Size and Num Workers: Adjust the batch_size and num_workers parameters in your DataLoader instances to optimize performance based on your hardware and dataset size.

- Data Preprocessing: Ensure that your data preprocessing steps are consistent across all train loaders to avoid inconsistencies.

- Custom Training Strategies: Explore advanced training strategies like curriculum learning or adversarial training to leverage the power of multiple train loaders.
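On the worker setting mentioned above: each DataLoader starts its own worker processes, so two loaders with num_workers=4 run eight workers in total. The helper below, `workers_per_loader`, is a hypothetical heuristic for choosing a starting value, not a Lightning or PyTorch API, and the result should be checked by measuring throughput.

```python
import os


def workers_per_loader(num_loaders, reserve=1):
    """Split the available CPU cores evenly across loaders.

    `reserve` cores are kept free for the main training process. This is a
    starting point only; the best value depends on the dataset, storage,
    and preprocessing cost.
    """
    if num_loaders < 1:
        raise ValueError("num_loaders must be at least 1")
    cores = os.cpu_count() or 1  # cpu_count() can return None
    usable = max(cores - reserve, 0)
    return usable // num_loaders


# Suggested num_workers for each of two train loaders on this machine.
print(workers_per_loader(num_loaders=2))
```

A result of 0 means the batches are loaded in the main process, which is a valid DataLoader setting and often a reasonable choice for small in-memory datasets.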

Conclusion

By effectively using multiple train loaders in PyTorch Lightning, you can enhance the flexibility and performance of your deep learning models. This technique is particularly valuable for handling diverse datasets, implementing complex training strategies, and addressing challenges like class imbalance.